|

Cheng-Zhi Anna Huang 黃成之

In Fall 2024, I started a faculty position at the Massachusetts Institute of Technology (MIT), shared between Electrical Engineering and Computer Science (EECS) and Music and Theater Arts (MTA). For the past 8 years, I have been a researcher at Magenta in Google Brain and then Google DeepMind, working on generative models and interfaces to support human-AI partnerships in music making. I am the creator of Coconet, the ML model that powered Google’s first AI Doodle, the Bach Doodle. In two days, Coconet harmonized 55 million melodies from users around the world. In 2018, I created Music Transformer, a breakthrough in generating music with long-term structure and the first successful adaptation of the transformer architecture to music. Our ICLR paper is currently the most cited paper in music generation. I was a Canada CIFAR AI Chair at Mila, and I continue to hold an adjunct professorship at the University of Montreal. I was a judge and then an organizer for the AI Song Contest (2020-22). I did my PhD at Harvard University, my master’s at the MIT Media Lab, and a dual bachelor’s in music composition and CS at the University of Southern California. |

|

🎵 We have multiple postdoc positions open for Fall 2025 at MIT Music Technology, through the School of Humanities, Arts, and Social Sciences (SHASS) as well as the College of Computing. 🎵 For the PhD program, apply through MIT EECS (by Dec 1st). See the recruiting section below for more details. |

Research Interests

I’m interested in taking an interaction-driven approach to designing generative AI, to enable new ways of interacting with music (and AI) that can extend how we understand, learn, and create music. I aim to partner with musicians and to design for the specificity of their creative practice and tradition, which inevitably invites new ways of thinking about generative modeling and human-AI collaboration.

I propose to use neural networks (NNs) as a lens onto music, and a mirror onto our own understanding of music. I’m interested in music theories and music cognition of NNs and for NNs, to understand, regularize, and calibrate their musical behaviors. I aim to work towards interpretability and explainability that is useful for musicians interacting with the AI system. I envision working with musicians to design interactive systems and visualizations that empower them to understand, debug, steer, and align the generative AI’s behavior.

I’m also interested in rethinking generative AI through the lens of social reinforcement learning (RL) and multi-agent RL, to elicit creativity not through imitation but through interaction. This framework invites us to consider how game design and reward modeling can influence how agents and users interact. I envision a jam space where musicians and agents can jam together, and where researchers can swap in their own generative agents and reward models, similar to OpenAI’s Gym. Evaluation would consider not only the resulting music but also the interactions, such as how well agents support other players.

I’m also interested in efficient machine learning, to build instruments and agents that can run in real time and enable human-AI collective improvisation. |

|

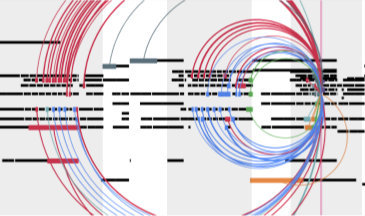

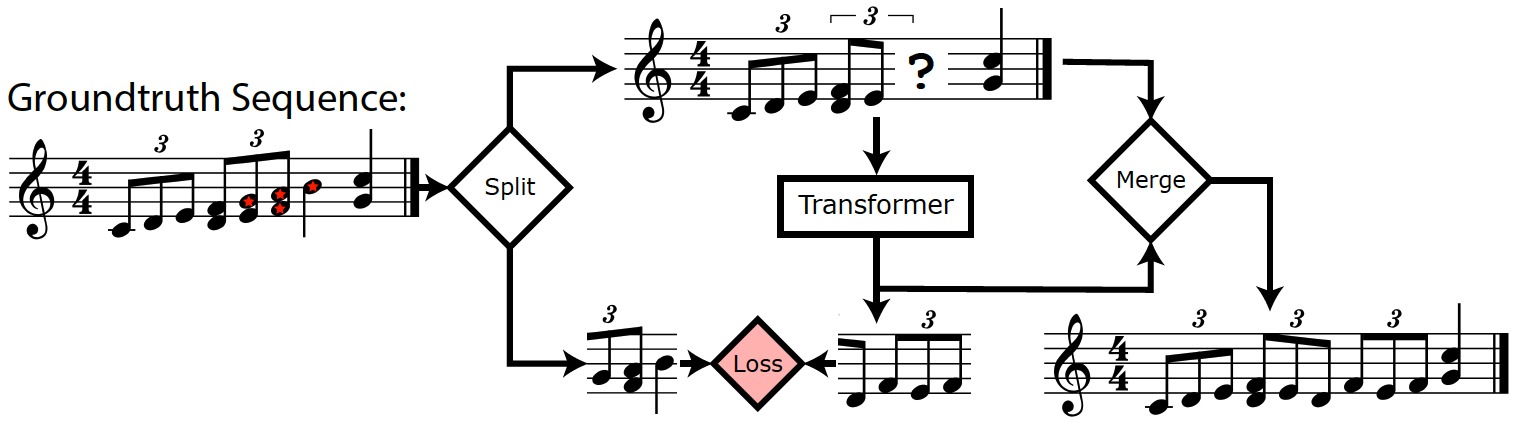

Music Transformer

Cheng-Zhi Anna Huang, Ashish Vaswani, Jakob Uszkoreit, Ian Simon, Curtis Hawthorne, Noam Shazeer, Andrew M Dai, Matthew D Hoffman, Monica Dinculescu, Douglas Eck. ICLR, 2019 |

|

The Bach Doodle: Approachable Music Composition with Machine Learning at Scale

Cheng-Zhi Anna Huang, Curtis Hawthorne, Adam Roberts, Monica Dinculescu, James Wexler, Leon Hong, Jacob Howcroft. ISMIR, 2019 |

|

Coconet: Counterpoint by Convolution

Cheng-Zhi Anna Huang, Tim Cooijmans, Adam Roberts, Aaron Courville, Douglas Eck. ISMIR, 2017 |

|

AI Song Contest: Human-AI Co-Creation in Songwriting

Cheng-Zhi Anna Huang, Hendrik Vincent Koops, Ed Newton-Rex, Monica Dinculescu, Carrie J Cai. ISMIR, 2020 |

|

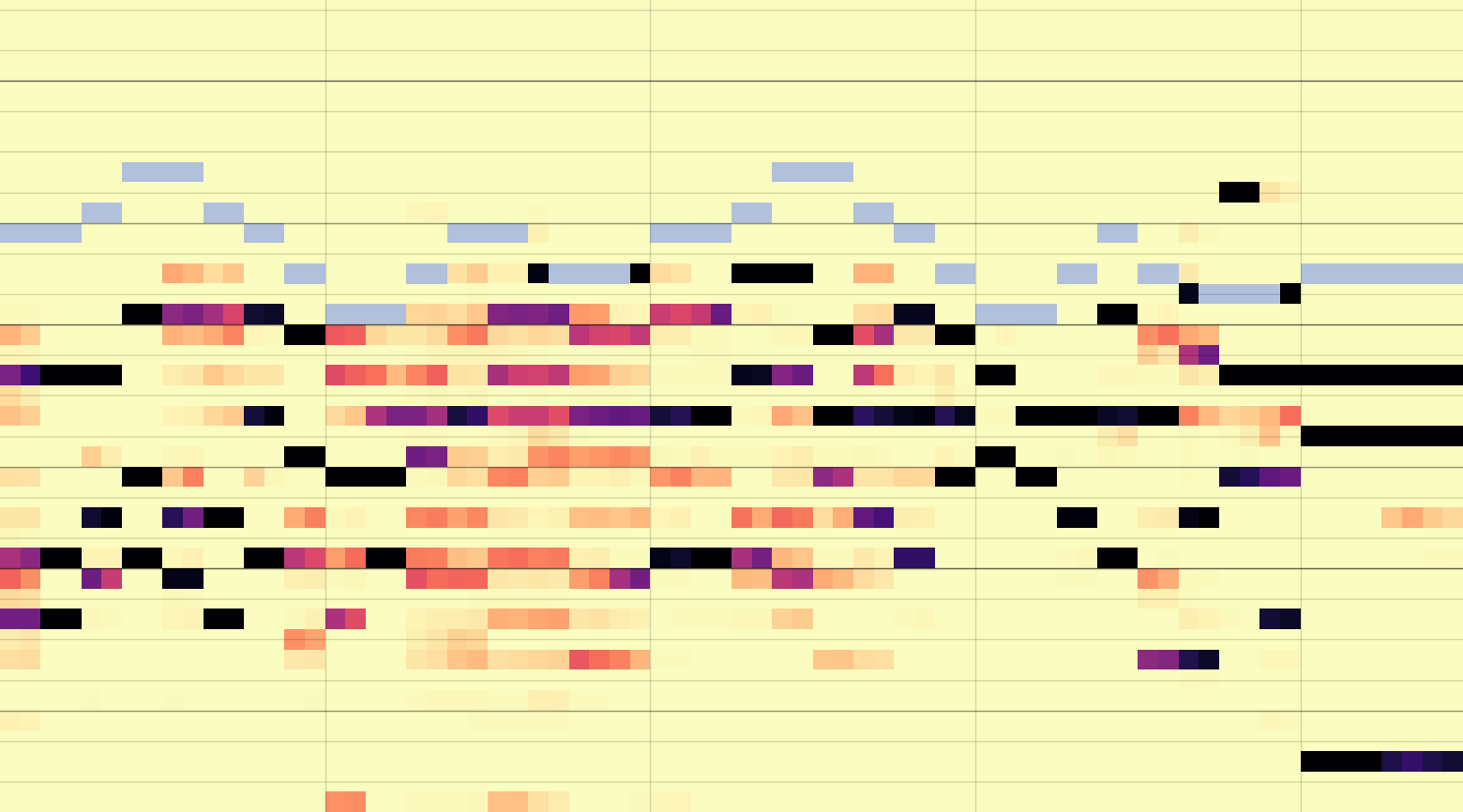

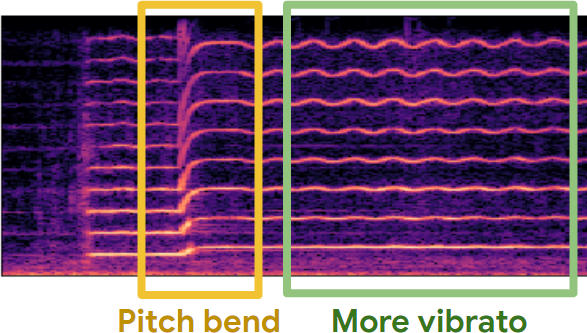

MIDI-DDSP: Detailed Control of Musical Performance via Hierarchical Modeling

Yusong Wu, Ethan Manilow, Yi Deng, Rigel Swavely, Kyle Kastner, Tim Cooijmans, Aaron Courville, Cheng-Zhi Anna Huang, Jesse Engel. ICLR, 2022. Outstanding Paper Award at the NeurIPS Workshop CtrlGen: Controllable Generative Modeling in Language and Vision, 2021 |

|

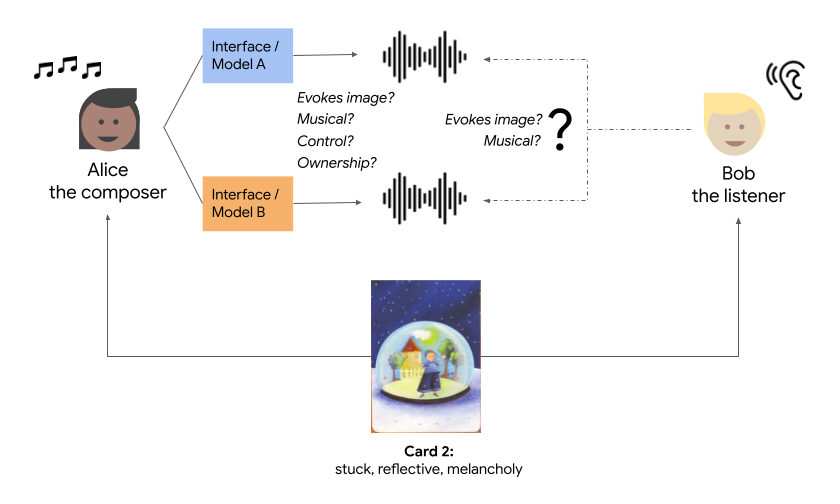

Expressive Communication: A Common Framework for Evaluating Developments in Generative Models and Steering Interfaces

Ryan Louie, Jesse Engel, Cheng-Zhi Anna Huang. IUI, 2022. Blog |

|

Editorial for TISMIR Special Collection: AI and Musical Creativity

Bob LT Sturm, Alexandra L Uitdenbogerd, Hendrik Vincent Koops, Cheng-Zhi Anna Huang. TISMIR, 2021 |

|

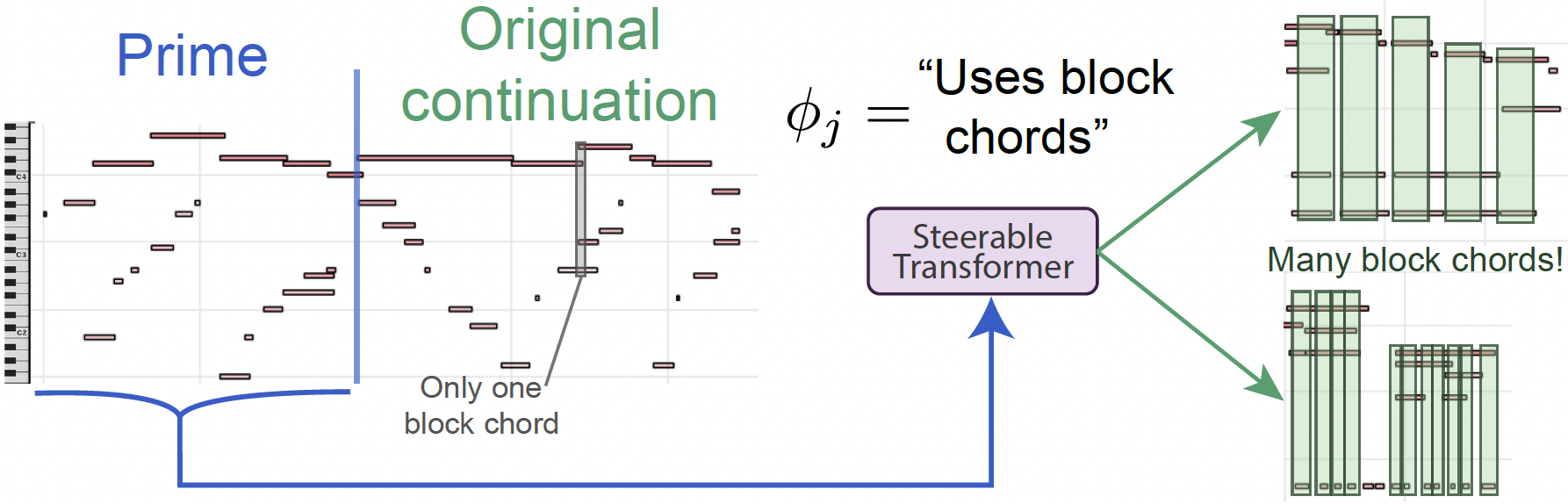

Compositional Steering of Music Transformers

Halley Young, Vincent Dumoulin, Pablo S Castro, Jesse Engel, Cheng-Zhi Anna Huang. HAI-GEN Workshop @ IUI, 2021 |

|

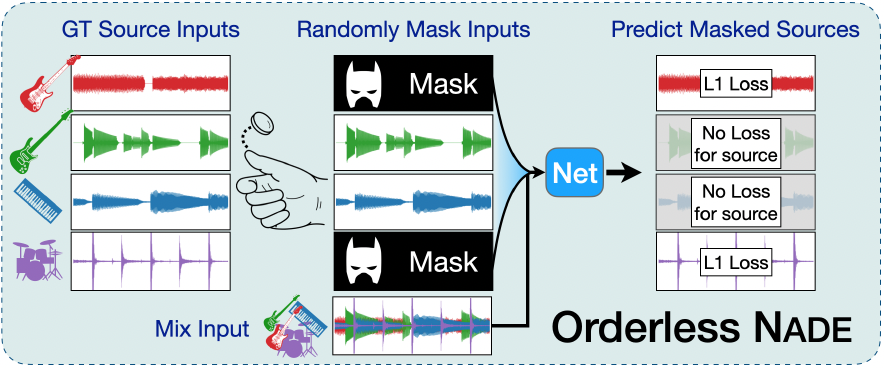

Improving Source Separation by Explicitly Modeling Dependencies Between Sources

Ethan Manilow, Curtis Hawthorne, Cheng-Zhi Anna Huang, Bryan Pardo, Jesse Engel. ICASSP, 2021 |

|

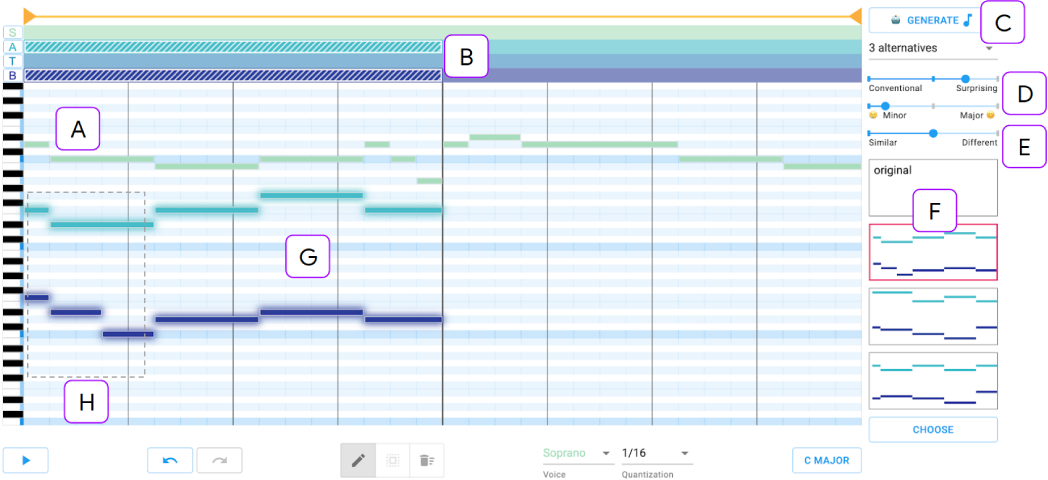

Cococo: Novice-AI Music Co-Creation via AI-Steering Tools for Deep Generative Models

Ryan Louie, Andy Coenen, Cheng-Zhi Anna Huang, Michael Terry, Carrie J Cai. CHI, 2020 |

|

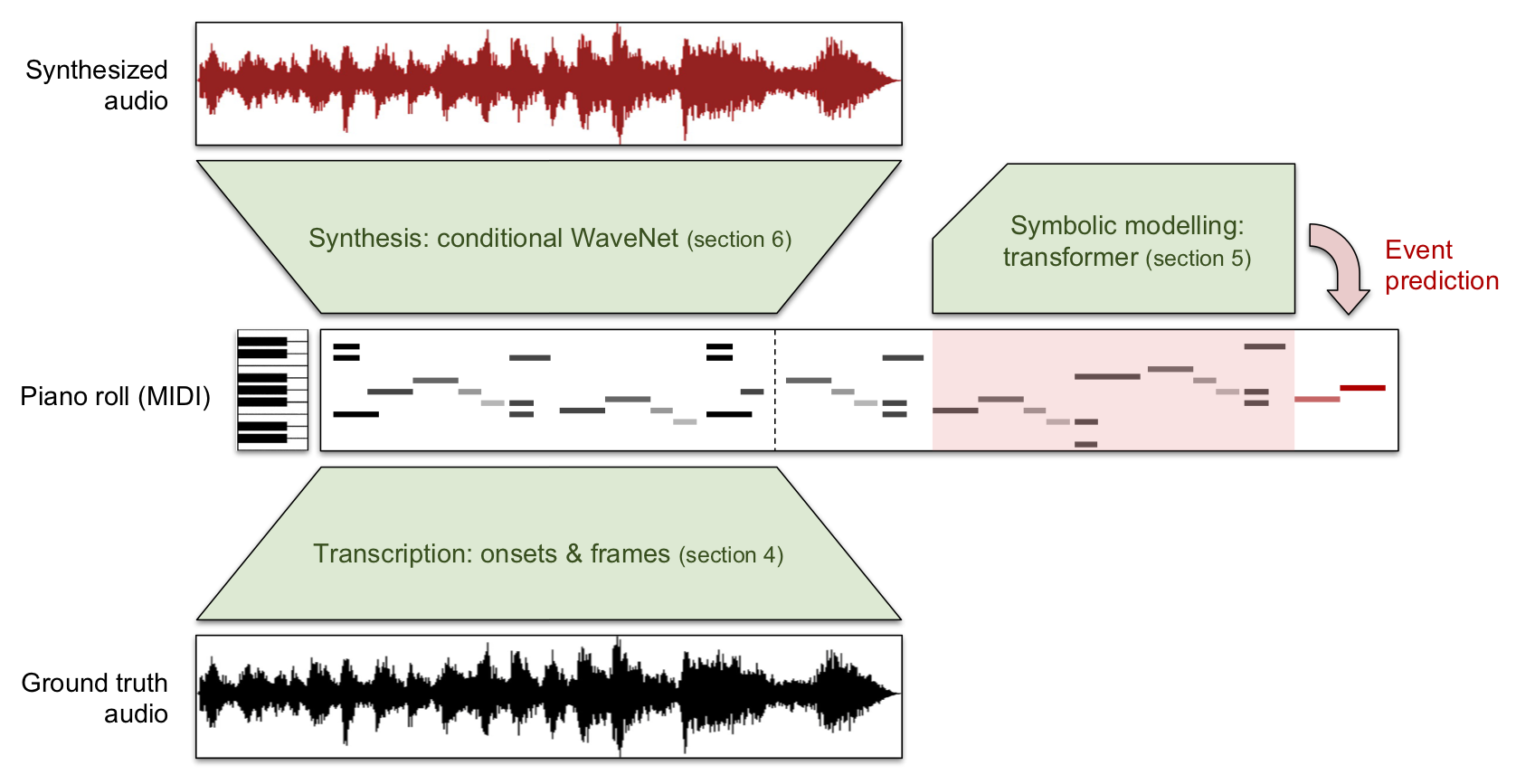

Wave2Midi2Wave: Enabling Factorized Piano Music Modeling and Generation with the MAESTRO Dataset

Curtis Hawthorne, Andriy Stasyuk, Adam Roberts, Ian Simon, Cheng-Zhi Anna Huang, Sander Dieleman, Erich Elsen, Jesse Engel, Douglas Eck. ICLR, 2019 |

|

Infilling Piano Performances

Daphne Ippolito, Cheng-Zhi Anna Huang, Curtis Hawthorne, Douglas Eck. NeurIPS Workshop on Machine Learning for Creativity and Design, 2018

Transformer-NADE for Piano Performances

Curtis Hawthorne, Cheng-Zhi Anna Huang, Daphne Ippolito, Douglas Eck. NeurIPS Workshop on Machine Learning for Creativity and Design, 2018 |

|

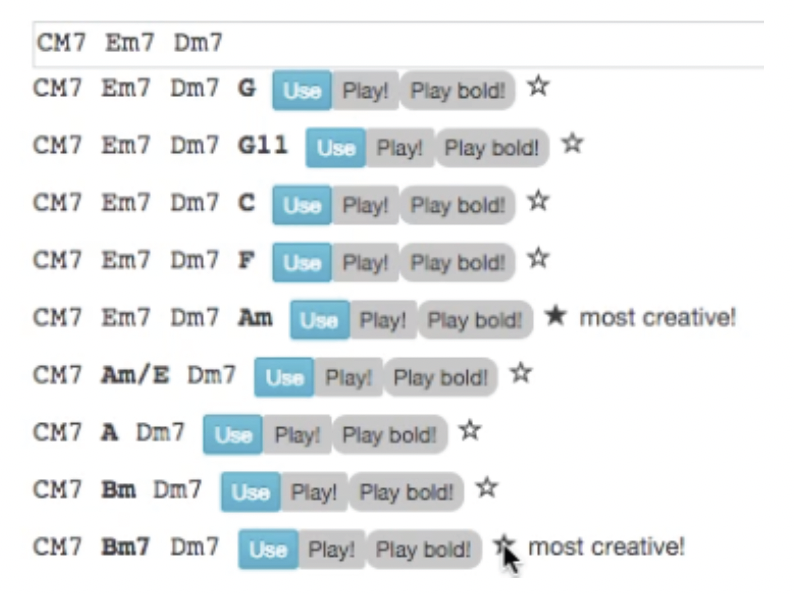

Chordripple: Recommending Chords to Help Novice Composers Go Beyond the Ordinary

Cheng-Zhi Anna Huang, David Duvenaud, Krzysztof Z Gajos. IUI, 2016 |

|

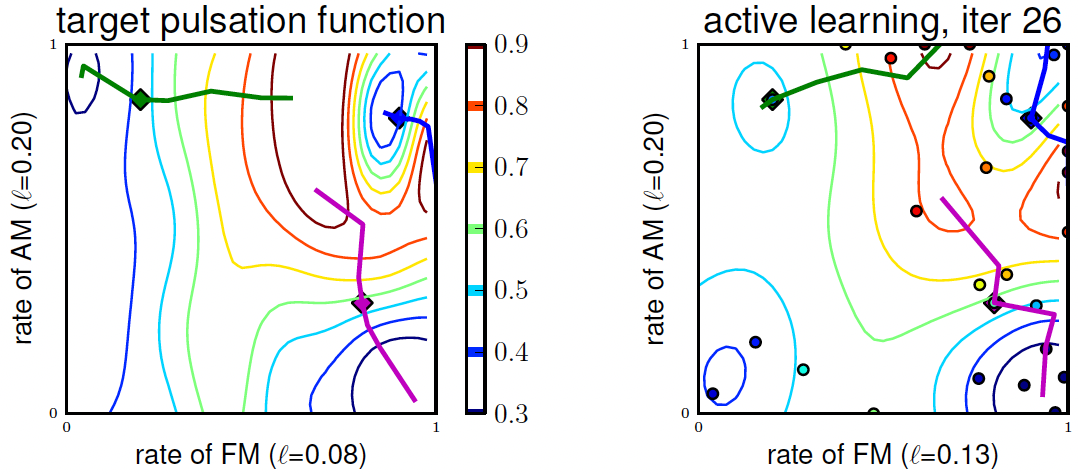

Active Learning of Intuitive Control Knobs for Synthesizers using Gaussian Processes

Cheng-Zhi Anna Huang, David Duvenaud, Kenneth C Arnold, Brenton Partridge, Josiah W Oberholtzer, Krzysztof Z Gajos. IUI, 2014 |

|

Melodic Variations: Toward Cross-Cultural Transformation

Cheng-Zhi Anna Huang. Master's Thesis, MIT Media Lab, 2008 |

|

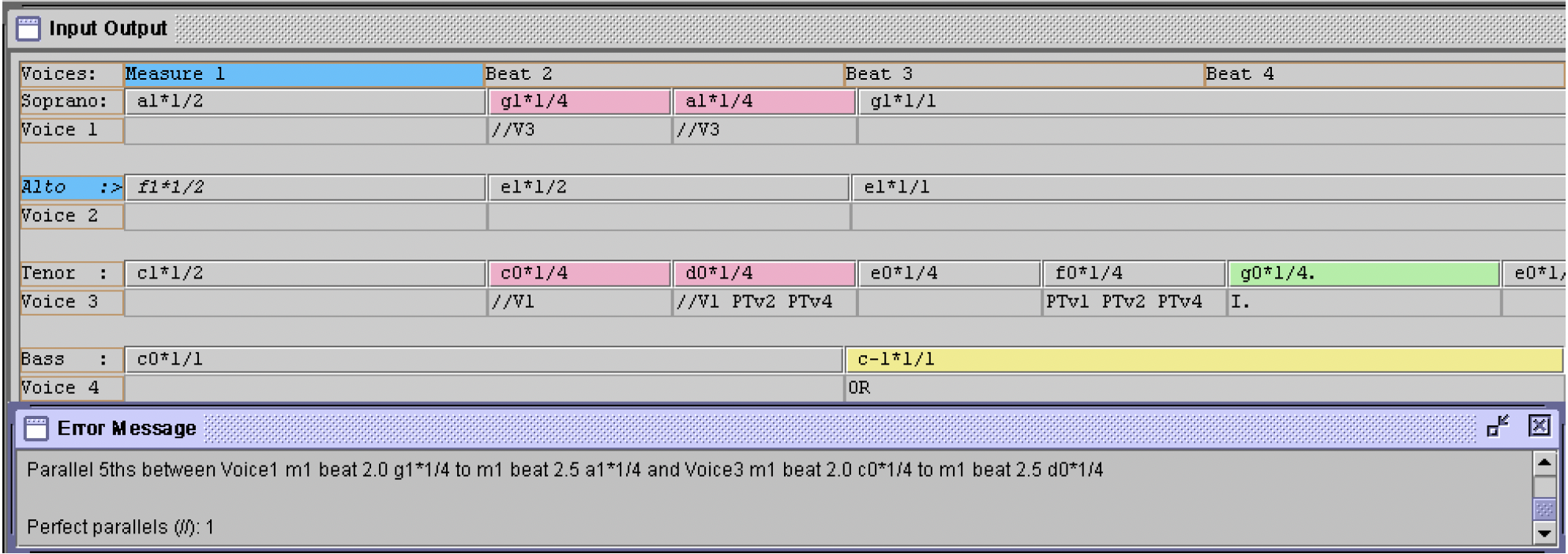

Palestrina Pal: a Grammar Checker for Music Compositions in the Style of Palestrina

Cheng-Zhi Anna Huang, Elaine Chew. Conference on Understanding and Creating Music, 2005 |

Music Compositions

|

Searching

Gu-zheng, violin, piano. 4'42". 2009. A sight-reading recording, played by Cheng-Zhi Anna Huang, Chad Cannon, Max Hume |

|

|

Fuguenza

Two clarinets, bass clarinet. 2'10". 2005. Performed by Timothy Dodge, Raymond Santos, Andrew Leonard |

|

|

Breathe

8 divisi a cappella. 4'38". 2005. First Prize in the San Francisco Choral Artists (SFCA) New Voices Project. Recording by SFCA |

|

|

The Butterfly Effect

Flute, violin, bassoon, double bass. 3'50". 2004. Performed by Cathy Cho, Yen-Ping Lai, Marat Khusaenov, Brian Marrow |

|

|

Half-awake

Stereo tape. 4'57". 2009. The track is soft; listen with good headphones if possible. |

|

|

A Lay of Sorrow

Mezzo soprano, clarinet. 1'29". 2004. Performed by Angela Vincente, Andrew Leonard |

|

|

Beautiful Soup

Mezzo soprano, clarinet. 1'42". 2004. Performed by Angela Vincente, Andrew Leonard

Recruiting

We have multiple postdoc positions open for Fall 2025 at MIT Music Technology. I'm particularly interested in multi-agent reinforcement learning, human-AI communication, interpretability, and visualization, in the context of designing interactive systems that enable musicians to jam with generative agents.

For the PhD program, apply through MIT EECS (by Dec 1st). When indicating your "research field of interest" in the application form, if music technology is not yet included in the dropdown menu, you can choose any field you're most interested in, such as (but not limited to) ML General Interests, Natural Language and Speech Processing, Reinforcement Learning, Deep Learning, Human Computer Interaction, or Cognitive AI. We'll reach out if additional information is needed. You'll probably hear back from MIT in February. Best of luck! |

|

This website was built with the help of this source code. |